These days it seems like everyone knows about ‘black swans.’ Natalie Portman and Mila Kunis aside, Nassim Nicholas Taleb used the phrase as the title of his 2007 book about “low probability, high impact” events to which, he argued, the human mind is especially vulnerable. But ‘black swans’ have an older role in debates about the philosophy of science (as Taleb, a self-described epistemologist, certainly knew) and, thus, relevance for International Relations, a discipline that often aspires to (social) scientific status.

In Karl Popper’s Logic of Scientific Discovery (1934), a seminal work in the philosophy of science, black swans play a role in tackling the so-called ‘problem of demarcation’: how to tell science from pseudo-science. In response to the longstanding principle of ‘verifiability’ (which held that the test of a scientific statement was whether or not it could be empirically verified), Popper proposed the principle of ‘falsifiability’ (which held that the test of a scientific statement was, instead, whether it could, in principle, be shown to be false). While verification was fine ammunition against many of the modernist mythologies of the age, it had its problems. It is almost impossible, for example, to verify many observable law-like regularities; indeed, verificationism would have us witness every apple fall from every tree before we could accept the laws of gravity as science. For Popper, the body of scientific knowledge should instead consist of those statements that have not yet been falsified; or, to use his example (and this is where swans come in): we can say scientifically that “all swans are white,” and be content with that as the state of scientific knowledge, 1) only until we in fact discover, and, crucially, 2) because we understand in advance the possibility of discovering, that falsifying “black swan.”

Now, this is certainly an advance over shaking all the world’s apple trees before coming to a decision about Newtonian mechanics. But falsifiability has its problems too, especially for the social sciences. As Mark Blaug wrote in an influential article, “virtually all social phenomena are stochastic in nature, in which case an adverse result implies the improbability of the hypothesis being true, not the certainty that it is false. To discard a theory after a single failure,” therefore, “amount[s] to intellectual nihilism.” As a result, it becomes a matter of some importance to determine (in the presence of adverse results) whether and when a theory or statement has been falsified.

Cue Imre Lakatos, who tried to solve this problem by looking at schools of thought or ‘research programs’ as a whole and asking whether, in general, they were ‘progressing’ or ‘degenerating.’ Because of what he termed the ‘principle of tenacity’ (or “the tendency of scientists to evade falsification of their theories by the introduction of suitable ad-hoc auxiliary hypotheses”), Lakatos stipulated that “auxiliary hypotheses must advance knowledge rather than simply preserve the old theory”; they must “predict some novel, hitherto unexpected fact” and “[be] actually corroborated, giving the new theory excess empirical content over its rival” (Blaug, 1975; Vasquez, 1997). Only if a research program was ‘progressing’ could it be understood as not yet having been falsified and so ‘scientific.’

One of the most serious criticisms of the neo-realist school of thought in recent years is that it may meet the definition of a degenerating research program. In 1997, John Vasquez asked the question explicitly in his article, “The Realist Paradigm and Degenerative versus Progressive Research Programs.” For more detail on this, see the exchanges among Vasquez, Paul Schroeder (Historical Reality vs. Neo-realist Theory), Colin and Miriam Elman (A Reply to Vasquez; A Reply to Schroeder), and Kenneth Waltz himself (Structural Realism After the Cold War).

Though the debate has covered diverse terrain since it first flared up, the source of the problem for neo-realism is that the end of the Cold War may have represented the kind of ‘black swan’ event that Popper (not Taleb) was talking about. If, as Waltz argued, bipolar systems are the most stable, the sudden collapse of the bipolar Cold War dynamic very much seems like an adverse result that raises the falsification question. More than twenty years later, neo-realist theory has produced a mobile army of emendations and auxiliary hypotheses, but the question has not gone away.

Reading Davide Fiammenghi’s article in the Spring Issue of International Security, “The Security Curve and the Structure of International Politics,” this is the question that springs most to mind. Fiammenghi deals with the familiar issue of the relationship between power (“a state’s aggregate capabilities”) and security (“a state’s probability of survival”). Though, as he tells us, debate over the relationship between power and security is at least as old as Demosthenes and Thucydides, there is no consensus today even among realists: in short, ‘offensive realists’ like John Mearsheimer argue that security increases as power increases; ‘defensive realists’ like Barry Posen or Charles Glaser argue that the accumulation of power can diminish security; and ‘unipolar realists’ like William Wohlforth argue that the concentration of power in one state promotes the security of all states in the system.

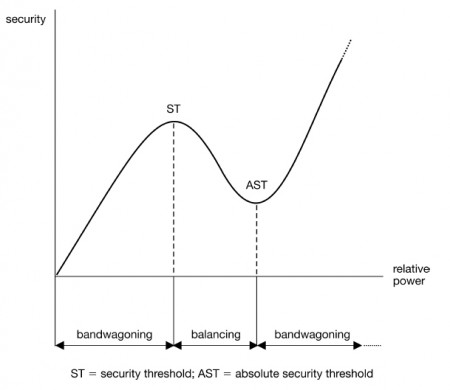

According to Fiammenghi, the solution to this lack of consensus is what he terms the “Security Curve,” which describes a “modified parabolic relationship between power and security that has three stages.”

As Fiammenghi writes: “in the first stage, any increase in a state’s power represents an increase in its security, because states with more power can recruit allies and deter rivals. In the second stage, further increases in power begin to diminish the state’s security, because the ongoing accumulation of capabilities causes allies to defect and opponents to mobilize. In the third stage, the state has amassed so much power that opponents have no choice but to bandwagon.”

This very neatly and conveniently ties up these three strands of neo-realism, but the initial suspicion is that it may be a bit too convenient. Unfortunately this is not the venue for a detailed engagement with the many nuances of Fiammenghi’s article; for that, we may have to wait for another issue of International Security. And for any definitive statements about the ‘scientific’ status of neo-realism, we may have to wait much longer. But, to me, Fiammenghi’s article seems better at stitching together theoretical inconsistencies than at explaining the world. For now, let’s simply pose the question: what “excess empirical content” does the Security Curve provide? What “novel, hitherto unexpected” facts does it explain?